Do ERP Ecosystems Act Like Cartels?

While the ecosystems formed by ERP and database vendors, systems integrators, and other partners can create value, there can be downsides as well. Here are five things you need to know about ERP vendors. Over the decades, large ERP and database vendors, system integrators, and other partners have formed an ecosystem to help customers deploy […]

With Oracle Sustaining Support, What You Don’t Know Can Hurt You

Oracle claims its Lifetime Support Policy lets IT organizations “enjoy continued peace of mind,” but that may hold true only if you’re committed to staying current with major product releases. If your software version is five or more years old, you’re more likely to feel you’re going out of your mind when you discover the true […]

Managed Services Projections Increase Through 2023

Spiceworks Ziff Davis (SWZD) surveyed more than 1,400 IT professionals from North America, Europe, Asia, and Latin America to determine how recent economic events and inflation are impacting organizational plans to invest in technology in the upcoming year. The results of that research formed the 2023 State of IT report. In this report, the news […]

Technology Productivity: Applying the Deep Work Model

In the recent Harvard Business Review article, “Beware of a Culture of Busyness,” author Adam Waytz describes how corporate culture has evolved from valuing what is produced to valuing how busy employees are. In this article, we examine how overvaluing time affects businesses and how applying the deep work model can help increase technology productivity. […]

Unleash Your IT Potential with Rimini ONE™ All-in-One Support and Services

In today’s competitive business landscape, organizations rely heavily on their enterprise software systems to drive productivity and enable growth. However, some vendors provide only limited levels of support for existing versions. As a result, many companies find themselves struggling to: keep up with the associated costs and complexities of maintaining their software investments; source high-quality […]

What Oracle’s Java SE Licensing Change Could Mean to You

Is the Java SE licensing change as simple as it sounds? When software vendors claim to be simplifying software licensing terms, experienced enterprise customers know to dig into the details to find how it may impact their costs. So, when Oracle in January revealed “a simple, low-cost monthly subscription” model for licensing its Java SE […]

High Heels, High Tech, and High Expectations

A Blog for Women in Leadership. Run the race and finish strong. That is the motto I have lived by in my professional, personal, and spiritual endeavors for many years. For me, it is a key foundation in my pursuit to be a Servant Leader, which represents a management style attuned to today’s often […]

Does Your Global Compliance Program Have Teeth?

Global compliance can be tricky. At the end of 2022, the Department of Justice (DoJ) ordered a large U.S.-based conglomerate to pay over $160 million after it made bribe payments to a high-ranking official at Brazil’s state-owned oil company to secure an advantage when bidding on a major $425 million contract. The DoJ found […]

Strategies to Help Win the Fight Against Breast Cancer

The fight to find a cure. The fight to increase awareness of and find a cure for breast cancer is ongoing. To support these goals, Rimini Street’s Team Pink and Nancy Lyskawa, Rimini Street EVP of Global Client Onboarding, hosted two webinars featuring speakers from the nonprofit Living Beyond Breast Cancer (LBBC) that provided information […]

Privacy Compliance is a Partnership

Privacy compliance is a critical priority for any organization, as it plays a crucial role in protecting the personal information of customers, clients, and employees. With the increasing amount of personal data being collected and stored by organizations, it is more important than ever to have robust privacy compliance policies and procedures in place to […]

Do ERP Systems and Saab Cars Share the Same Interoperability Issues?

Product marketing thrives on analogies. So, it’s not too surprising that I was struck by a great analogy while driving home from our Pleasanton, CA office, in my new car – well, it’s a new-to-me vintage 19-year-old Saab – after a product session about Rimini Connect™ and interoperability. Avoiding the Bay Bridge (thanks Waze!), I […]

How CISOs Put the X Factor in Being a CXO

The job of a chief information security officer, or CISO, is in many ways like that of other CXOs. Like their CXO peers, a CISO will have a strategic agenda that is aligned with that of the business. Within that agenda, a CISO will also have specific priorities, often strategic in nature and aligned […]

Economic Uncertainty is Causing CIOs to Hoard Their IT Budget

The uncertain economic outlook is causing some CIOs to defer spending and push out less-critical projects during the second half of the year. That was one key takeaway from a summer software market update presentation by J.P. Morgan analyst Mark Murphy. How CIOs balance dwindling budgets. Almost 40% of CIOs surveyed by J.P. Morgan in […]

Open-Source Database Market Roundup

The lock that long-time proprietary vendors have had on the database management system (DBMS) market is steadily eroding, even in the face of astounding industry growth. Overall, revenue growth across the industry has been extraordinary. “Since its brief pause in 2015, the DBMS market has reeled off six consecutive years of growth, with the […]

9 Open-Source Database Migration Best Practices

Deploying open-source database management systems (DBMSs) can deliver unique benefits to enterprises, including lower costs, greater flexibility, and innovation not always available with proprietary database solutions. However, migrating from an existing proprietary product to an open-source alternative requires planning and attention to ensure the process doesn’t go awry. The appeal of open-source licenses starts with the cost—free—and […]

Solving Your IT Talent Shortage: Upskill or Outsource?

The global tech talent shortage is real and caused by many factors, including: high demand for digital transformation; the pandemic; the great retirement/the great migration/the big quit; talent retention challenges; and newer technologies and more fun at other companies. As organizations worldwide try to crack the code on their skills gaps, how will you solve your […]

How to Overcome the 7 Toughest Multi-Cloud Challenges

Transitioning from locally hosted data centers to the Big 3 cloud providers is not a hypothetical “if” for most enterprises but a reality of “when.” The stability, security, and scalability of services like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) can solve many problems for IT leaders. But taking on these […]

IT Succession Planning: How to Prepare for the Inevitable

The Great Resignation. The Big Retirement. In November 2021, 4.5 million workers left or changed their jobs. On one end are the Baby Boomers who have critical skills and possess business knowledge that’s scarce in today’s job market. These skills and knowledge form the backbone of many businesses that have run on Oracle and SAP […]

Overcoming Salesforce Challenges: Unblocking the Top 5 Roadblocks

Salesforce has the potential to transform your business. However, SaaS can sometimes be misunderstood as simple — fast to set up and easy to manage and maintain. In reality, that couldn’t be further from the truth. Misunderstanding the complexity of a SaaS platform as powerful as Salesforce can lead to a lower ROI than expected. […]

4 Quick Wins to Drive Success for Your ERP Consolidation Project

CIOs: All Eyes Are on You. An ERP consolidation is no picnic. And it’s certainly not fast. It can be long and complicated, with the potential for stakeholders to lose interest and abandon ship. Add to that the severe lack of skilled ERP talent able to do the heavy lifting of integrating and decommissioning servers, […]

Guest Blog Executive Client Series: Delivering for the Business, Part 1

Part 1: Manufacturing in 2022: Building Business Resiliency and Funding Innovation, an Interview with Bas Ursem. How does a global manufacturer manage the impact of a global crisis? Bas Ursem, vice president, global IT at Kraton Corporation, a manufacturer of biobased chemicals and specialty polymers, paved a smart path to help his organization survive […]

Going Digital – Digital Transformation in Manufacturing

Digital transformation in manufacturing is a top priority, but funding obstacles exist. Manufacturing IT budgets could hold the key to finding the funds to drive the future of manufacturing. According to the World Economic Forum, more than 70% of manufacturing companies are stalled at the first steps of digital transformation, unable to move past the […]

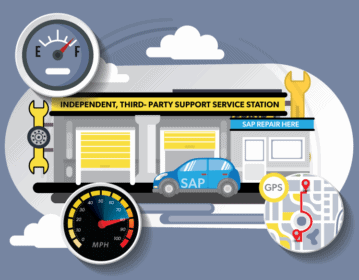

Third-party Support for SAP: The Gift That Keeps on Giving

Hand SAP your software support cancellation notice before the September 30 SAP deadline and give yourself the gift of improved ERP support quality this year — and every year. 2020 was a unique year for all, as the world grappled with COVID-19 and the impact it had on personal and professional lives. The pandemic […]

Creating A Customer Experience Devoid of Downtime

Third-Party Maintenance for SAP Helps T-Mobile Enhance Customer Experience and Reliability. “Remaining connected to family, friends, and customers is incredibly important, especially in these times,” states Erik LaValle, senior director of product and technology at T-Mobile. “Our customers need to know that they can rely on us 100% of the time.” The host of […]

Retail IT Trends: Retailers Continue to Lead Digital Transformation, Post-Pandemic — And by Necessity

CIOs: Note These 7 Retail Trends as You Advise the Business on IT Investments. The COVID-19 pandemic significantly disrupted the world of retail, necessitating accelerated digital transformation. Retailers around the globe were forced to rapidly reassess business models and operations to serve a customer base that was newly offsite and hands-free. Like many retail IT […]

Global Survey Results: CFOs Weigh in on Enterprise Digital Transformation and IT Spending Priorities

When it comes to helping right the ship in times of business turbulence, CFOs play a critical role. They partner closely with the CEO to refine business strategy and with the CIO on implementing enterprise digital transformation to quickly defend against the disruption. An important component of the CFO/CIO partnership during crisis management is prioritizing […]

IDG Survey: Navigating the Future of SAP

New IDG survey report explores how IT leaders are accelerating innovation while maximizing SAP investments. IT leaders are feeling pressure on all sides. The business wants IT to accelerate innovation to take advantage of technologies such as big data, AI, and IoT. The budget dictates a fine balance between maximizing ROI from existing IT investments […]

Weathering the Winter with the Founder of Winter Streets Project

Hi! I am Lou, Founder and Director of Winter Streets Project. Five years ago, I had an idea: instead of buying Christmas presents for my family and friends (who would only put them on eBay in January), I would do something to help those living on the streets. After a quick Google search, I had an […]

Licensees Extending Life of PeopleSoft ERP with AMS, IaaS, and Third-Party Support

2020 Survey Results: Analysis of Global PeopleSoft Licensee Behavior. Many organizations are spending too much of their IT budget (as much as 90%) on daily operating costs to run and grow the business, leaving only 10% to fund transformation.[1] Continued overspending on expensive Oracle annual maintenance contracts for PeopleSoft ERP, while following the […]

3 Strategic Takeaways from the Recent SAP Q3 Earnings Call

And how they can inform your SAP roadmap. On October 25, SAP released its third-quarter results. Described as its “biggest rout in 24 years,” SAP’s Q3 earnings revealed a weak quarter, with a significant drop in cloud and license revenue compared to its projections. This, despite an uptick in the industry overall, with the increase […]

Third-Party Software Support for SAP: Hidden Savings

The oft-quoted Michael Porter has stated that the essence of strategy is knowing ‘what not to do!’, which is as important as knowing what to do. How does this apply to maintenance for SAP and IT roadmap strategy? In a November 2019 survey conducted by Rimini Street, 79% of licensees said that they have […]

Third-Party Support: A Strategy for IT Agility, Not Just a Cost-Cutting Measure

The financial benefits of dropping vendor-sourced maintenance in favor of third-party enterprise software support are very tangible; the lure of cutting expenditures by 50% – for what are commonly agreed to be superior levels of service – has proven compelling to thousands of companies. However, for many CIOs, the transition to third-party support presents advantages […]

5 Questions on S/4HANA Migration — Answers for SAP Business Suite Licensees

SAP’s announcement extending ECC 6.0 support from 2025 to 2027 is an apparent acknowledgment that SAP customers do not see value in a prompt migration to S/4HANA. Even prior to the pandemic, nearly 80% of SAP licensees planned to continue to run their current customized, mature SAP systems for five additional years or more.[1] […]

The Hidden Tax that Comes with Traditional Application Management Services (AMS)

The model for traditional application management services (AMS) is broken. It’s dated and complex, with a time-and-materials contract structure and a “man-hours” billing system. While the model was originally designed for issue resolution, in practice it may lead to a continuous, almost unwitting client accommodation of large and growing numbers of billable hours for […]

New Application Management Service (AMS) Models are Emerging to Address the IT Talent Shortage

This article originally appeared on Diginomica.com; it has been modified for this space. The pool of IT experts is shrinking, and the talent that remains is being spread thinner as new technologies create demand for expertise. According to Gartner’s “Survey Data: Gartner Talent Management for Tech CEOs Survey, 2020”[1] report: “Tech CEOs rank attracting and […]

The Best Time to Take Back Control of Your Oracle Roadmap is NOW

Many Oracle customers are currently facing a May 2020 maintenance renewal deadline that may lock them into another year of high costs, limited innovation and falling behind. IT today is facing a mandate from the business to evolve beyond a cost center to a source of innovation and growth. However, CIOs running Oracle can be […]

Overpaying for Application Management Services? It’s Not About Man-Hours Anymore

Why is outsourcing Application Management Services (AMS) so complicated, and why doesn’t it work like it’s supposed to? The AMS concept is simple: offload incidents, service requests, routine tasks, and IT backlogs so that internal teams are free to focus on game-changing initiatives. But the reality? Too many man-hours, yet nothing seems to get done; […]

5 Ways to Leverage ERP in the Cloud Without Necessarily Moving to Cloud ERP

This article originally appeared on Diginomica.com; it has been modified for this space. Everyone is moving to the cloud, no doubt about it. How to take advantage of the cloud is the multimillion-dollar question. Get stampeded into making the wrong decision, and you could wind up throwing away millions. Do it right, and the […]

Overwhelmed by Opportunity, Salesforce Customers Need Assistance

Salesforce customers are drowning in opportunity – a good problem to have. In most cases, the challenge they have with Salesforce is not that it is rigid or limited, like the enterprise software of the past, but that it is so flexible and ever-evolving that they worry they aren’t extracting all the value from it […]

Two-Thirds of SAP Licensees Surveyed Not Ready For S/4HANA

Two-thirds of SAP licensees surveyed have no plans to migrate to S/4HANA, according to Rimini Street research. That’s true, despite SAP’s planned end of mainstream maintenance for Business Suite 7 and its core ECC6 ERP platform*. The deadline puts pressure on CIOs to decide their SAP roadmap and strategy for the next decade and beyond. They […]

The SAP S/4HANA Skills Crunch – How Bad Could It Get?

Our colleagues in Japan recently forwarded a series of SAP user group newsletter articles where the title of the series translates roughly into, “The Depression of SAP Users.” Why are they depressed? Likely, because they are being told they must hurry up and implement SAP S/4HANA before the deadline, when SAP says it plans to […]

Rimini Street Survey Confirms Oracle Customers Want More Savings, Best-In-Class Cloud Options

When Rimini Street surveyed Oracle customers recently and asked their top priorities, they put cost optimization at the top of the list. Yet a recent Gartner survey of CIOs[1] identified enabling growth as their top priority. What accounts for the difference? I encourage you to read the full survey report, Why Enterprises Are Rethinking […]

How To Fix Desktop Java Version Glitches With Enterprise Applications

ERP systems and other enterprise applications sometimes break when the software was written to work with a specific release of Java that is not the one user desktops are configured to use by default. What are you supposed to do when that happens? Make users revert to a version of Java that may be obsolete […]

How Welch’s Squeezed More Value From IT With Rimini Street

Because my ultimate bosses are family farmers, I do not get a lot of credit for spending money on technology for technology’s sake. Farming is a tough business, and they are right to have high expectations of the technology investments we make. Welch’s is such a well-known brand many people probably assume we’re a subsidiary […]

What Retailers Have In Common on IT Spending and Digital Innovation

The holiday season gave retailers an unexpected gift: sales rose 5.1 percent between Nov. 1 and Dec. 24, a six-year high.[1] To put that into perspective, the National Retail Federation put the average annual increase at about 3.9 percent over the previous five years. Online sales grew even faster, rising 19.1 percent,[2] according […]

Oracle’s Cloud Programs: Beware a Wolf in Sheep’s Clothing

The promise of the cloud is to liberate IT from the cost and burden of tiresome daily IT administration, while delivering more freedom to select from the industry’s latest and best innovations. Too bad that goes against the very DNA of legacy ERP vendors such as Oracle, whose stock price and revenues historically are closely […]

With ERP Support from Rimini Street, Multnomah County Gains Flexibility While Making Best Use of Taxpayer Dollars

At just 465 square miles, Multnomah County in Oregon is home to 800,000 residents. With the county seat in Portland, the organization employs 8,000 people and has an annual operating budget of $2 billion USD, funded by taxpayers. Multnomah County is responsible for its operating units and for securely maintaining its systems to fulfill certain […]

ESCO Rides the Wave of Economic Change with Help from Rimini Street

In today’s dynamic global economy, organizations in nearly all sectors are subject to shifts in the marketplace, experiencing periods of high growth as well as slumps. If your organization is like most, when a downturn occurs, you may start to think about trimming operational expenditures. Often, one of the largest budget line items, aside from […]

Five Steps for Bringing About Change in IT

IT leaders are upbeat, for the most part, as they continue to move ahead with digital transformation efforts. It’s not that the budget spigots are opening wide; for most, spending and hiring rates are flat. “One of the biggest reasons for the hopeful outlook is the fact that business and IT are finally on the […]

Taking Control of Your Software Licenses in the Cloud Era

Software licensing is like a devil’s pact. Enterprises fund and commit to continue funding software development costs in exchange for the promise of ongoing support and, hopefully, functionally rich upgrades. Over time, however, CIOs often end up cursing the growing costs of supporting mature-but-critical applications and having to pay for forced upgrades they don’t always […]

5 Reasons to Kill (or Rethink) a Pilot Project

Once they get a foot in the door, enterprise software vendors will predictably start pushing pilots of their latest and greatest wares, from hybrid cloud to in-memory databases. For customers, testing these extensions and enhancements can make sense, provided there’s a strong business case, supportive leadership, and the right success metrics. Innovative organizations are always […]

Video: How to Fix ERP Browser Compatibility Issues

Web browser compatibility problems can be a surprisingly big issue for any IT organization trying to maximize the useful life of its ERP and other enterprise systems that came of age early in the web era. Wasn’t browser-based computing supposed to make everything easier? If your current ERP system meets your needs, we don’t […]

Maximize Your Opportunity within the Cloud

One of the most appealing aspects of cloud computing is the promise — in theory at least — that if you’re not happy with a vendor, you can migrate to another with much less pain than with traditional enterprise solution providers. Today, CIOs have an almost bewildering choice of cloud applications and cloud platforms. This […]

Digital Transformation: Funding the Ongoing Journey

If a technology solutions salesperson walks out of your office without playing the “digital transformation” card, he or she is not likely long for that job. Vendors, whether through strategic vision or competitive necessity, have latched onto the aspirations and concerns of business customers who fear digital disruption. “Digital transformation rocketed to the top of […]

Why Everyone Wants to “Help” with GDPR Compliance

With the May 25 deadline for compliance with the European Union’s General Data Protection Regulation (GDPR) rapidly approaching, interest in the topic is at a fever pitch. The only bigger news will come when the first company gets slapped with a big fine for failing to adequately protect personal privacy under the law. Repeat […]

Don’t Allow SAP Indirect Access Fees to Block Innovation

Much of the digital business innovation taking place today involves reaching beyond the transactional systems at the core of a business to create new user experiences. Unfortunately, some SAP customers have discovered that creating a new user experience front end to an SAP back end can land them in hot water, if SAP decides that […]

Why SAP Will Likely Extend ECC6 Maintenance Beyond 2025

*On February 4, 2020, SAP announced mainstream support for SAP Business Suite 7 until 2027. We’ve seen it happen before and we will probably see it happen again. Within the next two years, it is increasingly likely that SAP will extend the maintenance window for ECC 6/Business Suite beyond 2025*. In 2014, SAP announced […]

How Innovative SAP Customers Tackle Performance and Interoperability Issues

Many SAP customers want to continue to run their core system of record for the foreseeable future. But performance and interoperability challenges are growing as technology environments change amidst rapid transitions to diverse hybrid IT environments. These rapid changes can have a significant performance impact on SAP systems including new software and servers, upgrades to […]

Maximizing Value from Your SAP Business Suite Investments

Over a period of years, you’ve likely invested millions in an SAP platform that today is robust, stable and core to running your business. Like any good investment, your ECC 6.0 system and other Business Suite applications should yield strong dividends for years to come. In the context of IT, that means delivering the efficiency, […]

Why Is Cloud Confusing? It’s Deliberate.

Major enterprise software vendors like Oracle and SAP are aggressively promoting “cloud,” but chronic overuse of the term may be creating common misconceptions. There are essentially two distinct cloud models, with very different characteristics, that are frequently confused because both are generically called “cloud.” The ongoing blurring of the term “cloud” can disadvantage […]

Plan Ahead as the Cost of SAP and Oracle DBMS Runtime Skyrockets

As what was once a mutually beneficial partnership between SAP and Oracle grows increasingly contentious, customers may find themselves caught in the middle. One symptom: the price for getting an Oracle runtime database license bundled with SAP Business Suite is now more than double what it was three years ago. As a result, customers covered […]

Cut IT Support Costs to Drive Digital Business Initiatives

Does your IT organization eagerly embrace digital transformation, or quiver in fear at the prospect of it? If the former, you’re following the model of world-class IT organizations that run more efficiently and devote a larger portion of their process costs to new business-focused initiatives. That’s the takeaway from a study by Hackett Group, as […]

What You Need to Know to Exploit the Cloud

As digital transformation shakes up traditional business models, organizations of every size, in every industry, are exploring better ways to keep pace with change. Adoption of cloud computing technology has significantly increased over the last few years, promising a great opportunity for innovation amongst businesses if approached correctly, selectively and at the right pace. And […]

Don’t Trip on the Cloud Migration Path

It seems like everybody is rushing to migrate to cloud applications, and you don’t want to get left on the wrong side of an inflection curve. But there are pitfalls for hasty cloud migration, so it’s important to sit back and fully analyze what you’ve got and where you want to go. Early in 2016, […]

What’s the State of the CIO?

The life of a CIO? It’s complicated. That’s the conclusion of CIO Editor in Chief Dan Muse, introducing the publication’s 2017 State of the CIO report. “Moving apps and workloads to the cloud, ensuring legacy software can talk to off-premises apps, and keeping networks and systems secure remain core functional tasks of the CIO role,” […]

Is There Hesitation to Commit to SAP S/4HANA?

Most SAP licensees plan to continue running their proven SAP ERP releases, given their rich functionality that more than meets their business needs, without the cost, disruption, and risk of migrating to S/4HANA. This was the top finding in a recent global survey conducted by Rimini Street, selected by 89 percent of the survey respondents. […]

Revealing the Risks at the S/4HANA Crossroads

As SAP continues to raise its bet for S/4HANA, SAP customers find themselves at a crossroads. Is it time to migrate to SAP’s new application, or is a wait-and-see approach the best option? More than two years after SAP launched S/4HANA amid considerable fanfare, user adoption has been underwhelming. In the UK, just 5 percent […]

The Evolving Role of the CIO in the Digital Age

For the longest time, the role of the chief information officer was set in stone. The job was all about keeping the technology “lights” on and, if possible, reducing costs. Today that job description is outdated. In the era of big data, apps and cloud computing, the role of today’s CIO is to enable the […]

Achieve the Best of Both Worlds with SAP and Hybrid IT

SAP customers have been at the forefront in embracing hybrid IT. This best-of-both-worlds approach lets them preserve and continue to leverage investments in mission-critical SAP ERP. At the same time, best-of-breed cloud applications help meet demands for greater business agility. A study by the IT software management vendor SolarWinds quantified what was widely recognized across […]

How Companies Are Accelerating Business by Innovating Around the Edges of ERP

As the pace of business change accelerates, businesses are seeking to transform IT to pull out in front of competitors. But you’ve already invested considerable staff, time, and money in implementing and customizing your ERP technology. How do you keep pace with change and continue to deliver new capabilities, while minimizing risk and ensuring core […]